Leveraging LLMs for Smarter Taxonomy Interactions

This article by XBRL International Guidance Manager Revathy Ramanan is based on her presentation at Data Amplified 2024. You can also watch the original video here.

In previous blogs, I’ve explored how we can leverage large language models (LLMs) like ChatGPT to significantly facilitate and enhance the analysis of XBRL reports, including both numeric data and narrative disclosures. These tools allow users to make natural language queries, removing the need for coding expertise. By providing LLMs with XBRL data, we allow these models to understand the structure of the data and tap into taxonomy metadata, yielding deeper and more accurate insights than we could obtain from traditional unstructured formats such as PDFs.

In this article, I will leave behind end-user analysis – for now – to consider how the capabilities of LLMs can be utilised to create smarter, more interactive XBRL taxonomy experiences. This piece is particularly aimed at taxonomy authors and software vendors designing taxonomy navigation experiences within their tools. Let’s dive in!

Improving Label Clarity and Consistency

Defining labels in a taxonomy can be tricky and time-consuming. It’s not just about following rules—making labels clear, concise, and consistent often requires judgment that standard automation tools can’t easily replicate. This is where LLMs can offer useful support, helping taxonomy authors fine-tune label definitions with more ease and accuracy.

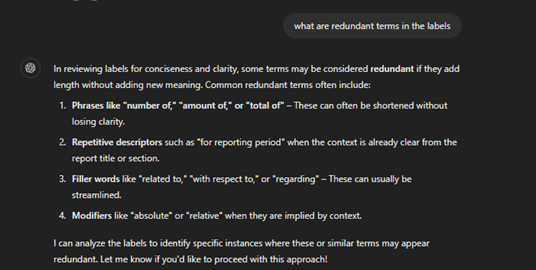

I provided ChatGPT with a list of standard labels in spreadsheet form and asked it to identify redundant words. The results were insightful: it flagged phrases such as “amount of total,” “for the reporting period,” and “related to” as examples of unnecessary verbosity.

For instance, the phrase “reporting period” is frequently used in labels but is highly likely to be redundant, since all elements in the taxonomy inherently relate to a reporting period. By eliminating such phrases, taxonomy authors can enhance clarity without sacrificing meaning. Using LLMs for such queries gives useful clues to taxonomy authors when refining labels.
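LLM suggestions like these are easy to sanity-check before acting on them. The sketch below is not what I ran in the experiment; it is a hypothetical Python helper that counts how many labels contain each candidate phrase, so an author can see how widespread a suggested redundancy is. The label list and phrase list are invented examples, and in practice the labels would come from the taxonomy's label linkbase or a spreadsheet export.

```python
from collections import Counter

# Hypothetical sample labels, invented for illustration.
LABELS = [
    "Revenue for the reporting period",
    "Dividends paid for the reporting period",
    "Amount of total assets",
    "Expenses related to research",
    "Number of employees",
]

# Candidate filler phrases, e.g. those an LLM flagged as verbose.
CANDIDATE_PHRASES = ["amount of total", "for the reporting period", "related to"]


def phrase_counts(labels, phrases):
    """Count how many labels contain each phrase (case-insensitive)."""
    counts = Counter()
    for label in labels:
        lowered = label.lower()
        for phrase in phrases:
            if phrase.lower() in lowered:
                counts[phrase] += 1
    return counts


def labels_with_phrase(labels, phrase):
    """Return the labels containing a phrase, for manual review."""
    return [label for label in labels if phrase.lower() in label.lower()]


# Print the phrases from most to least widespread.
for phrase, n in phrase_counts(LABELS, CANDIDATE_PHRASES).most_common():
    print(f"{n:3d}  {phrase}")
```

The LLM is good at proposing which phrases feel redundant; a plain count like this only tells the author how many labels a change would touch.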

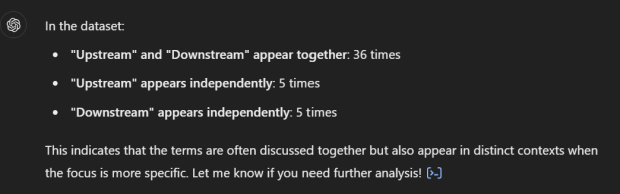

Beyond simple redundancy detection, LLMs can analyse label patterns to improve consistency. I asked ChatGPT to examine standard labels from a sustainability reporting taxonomy containing the term “value-chain.” It recognised that these labels often contained the terms “upstream” and “downstream,” frequently appearing together.

In sustainability reporting, the term “value-chain” is normally understood to encompass both upstream and downstream activities. Standard labels, designed to capture each reporting concept concisely, do not need to restate this every time; they only need the term “upstream” or “downstream” when a concept covers only upstream or only downstream impacts. The pattern spotted by ChatGPT suggests that some standard labels unnecessarily include both terms, and that this detail could instead go in the documentation label, which provides a longer description. Such insights help authors rethink label construction, ultimately leading to a more structured and standardised taxonomy.
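Once an LLM has surfaced a pattern like this, the affected labels can be listed deterministically for review. The following is a minimal, hypothetical sketch, assuming labels are plain strings; the sample labels are invented. It treats “value-chain” and “value chain” as the same phrase and flags labels that name both directions.

```python
import re

# Hypothetical labels, invented for illustration.
LABELS = [
    "Material impacts in upstream and downstream value chain",
    "Upstream value-chain emissions",
    "Workers in the value chain",
]

WORD = re.compile(r"[a-z]+")


def words(label):
    """Lower-case a label and split it into letter-only words, so that
    "value-chain" and "value chain" both yield ["value", "chain"]."""
    return WORD.findall(label.lower())


def mentions_value_chain(label):
    """True if "value" is immediately followed by "chain"."""
    w = words(label)
    return any(a == "value" and b == "chain" for a, b in zip(w, w[1:]))


def names_both_directions(label):
    """True if the label contains both "upstream" and "downstream"."""
    w = set(words(label))
    return "upstream" in w and "downstream" in w


def labels_to_review(labels):
    """Value-chain labels that spell out both directions: candidates for
    moving that detail into the documentation label."""
    return [
        label
        for label in labels
        if mentions_value_chain(label) and names_both_directions(label)
    ]


print(labels_to_review(LABELS))
```

Labels that name only one direction, such as “Upstream value-chain emissions”, are deliberately left alone, since that is exactly the case where the qualifier carries meaning.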

On-the-Fly Quality Checks

Another useful application of LLMs is on-the-fly, rule-based quality checking of taxonomies. For instance, taxonomy authors can quickly verify whether all presentation trees start with an abstract element, in line with best practice. Integrating LLM-driven quality checks can complement authors’ existing review processes, ultimately improving taxonomy integrity.
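For a rule as crisp as “every presentation tree starts with an abstract element”, a small script can confirm an LLM’s answer. This is a hypothetical sketch, assuming each presentation tree has already been loaded as a list of (parent, child) arcs per role, and that each concept’s `abstract` attribute from the taxonomy schema is available as a boolean. The role and concept names are invented.

```python
# Hypothetical presentation trees: role -> list of (parent, child) arcs.
TREES = {
    "BalanceSheet": [
        ("StatementOfFinancialPositionAbstract", "Assets"),
        ("StatementOfFinancialPositionAbstract", "Liabilities"),
    ],
    "IncomeStatement": [
        ("Revenue", "CostOfSales"),
    ],
}

# Hypothetical abstract flags, as read from each concept's abstract attribute.
IS_ABSTRACT = {
    "StatementOfFinancialPositionAbstract": True,
    "Assets": False,
    "Liabilities": False,
    "Revenue": False,
    "CostOfSales": False,
}


def tree_roots(arcs):
    """A root is a parent that never appears as a child in the same tree."""
    parents = {parent for parent, _ in arcs}
    children = {child for _, child in arcs}
    return sorted(parents - children)


def non_abstract_roots(trees, is_abstract):
    """Map each role to its non-abstract roots; roles that pass are omitted.
    Concepts missing from is_abstract are treated as non-abstract."""
    problems = {}
    for role, arcs in trees.items():
        bad = [root for root in tree_roots(arcs) if not is_abstract.get(root, False)]
        if bad:
            problems[role] = bad
    return problems


print(non_abstract_roots(TREES, IS_ABSTRACT))
```

Pairing the two approaches plays to their strengths: the LLM lets authors pose checks in plain language, while a deterministic check gives a repeatable result for rules that are already well defined.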

Simplifying Formula Interpretation with LLMs

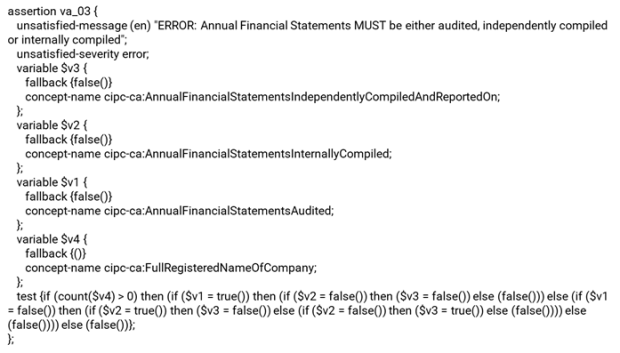

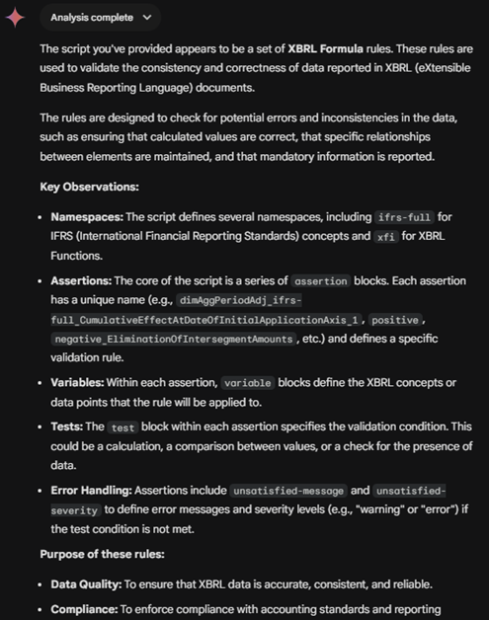

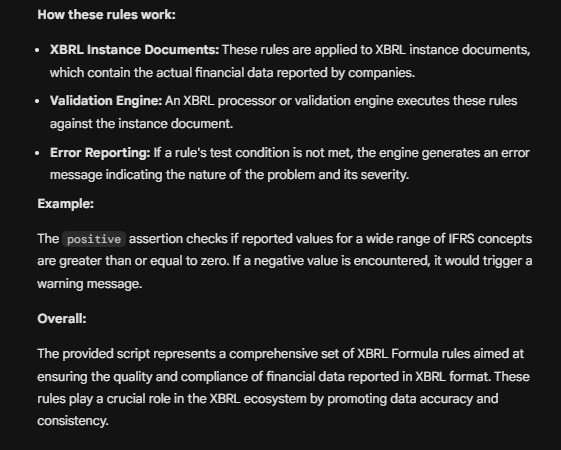

Formula rules are a key feature of XBRL. They offer a standardised mechanism for embedding validation rules within an XBRL taxonomy, and play a crucial role in ensuring data quality. However, one common criticism is that these rules can be difficult for humans to interpret. While text-based XBRL Formula (XF) offers a friendlier syntax, it can still feel technical and complex.

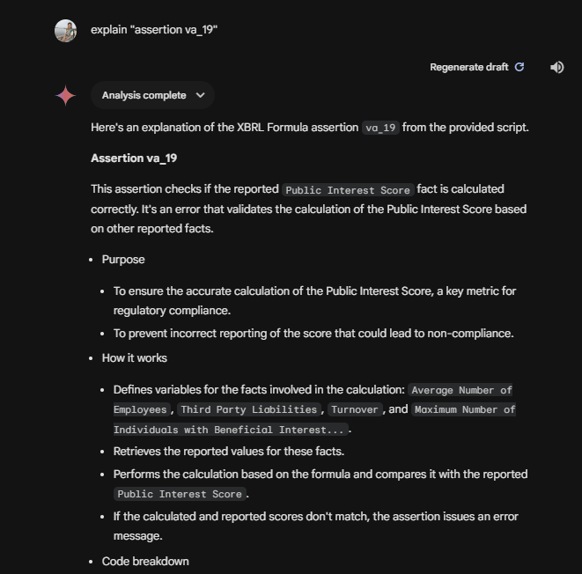

LLMs excel at interpreting code, making them valuable tools for decoding formula logic. For this experiment, I used Google Gemini to show that different available tools can be used for these types of tasks. I provided Gemini with XBRL Formula rules in XF and asked it to explain their structure. The model effectively identified key patterns and summarised the rules in a clear, business-friendly manner.
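A tool that offers this kind of explanation on demand would typically wrap the rule text in a fixed set of instructions before sending it to a model. The helper below is a hypothetical sketch of such a wrapper; the instruction wording is an assumption, not the prompt used in the experiment, and the rule text itself is passed in unchanged.

```python
from textwrap import dedent


def build_rule_explanation_prompt(rule_text, audience="business users"):
    """Wrap one XF rule in instructions asking an LLM to explain it.

    The instruction wording is a hypothetical example, not the prompt
    used in the experiment described in this article.
    """
    instructions = dedent(f"""\
        The text between the <rule> tags is an XBRL Formula rule written in XF syntax.
        1. Summarise in one or two sentences, for {audience}, what the rule checks.
        2. List each variable and the concept or value it refers to.
        3. Explain when the rule passes and when it fails.
        """)
    # Delimit the rule explicitly so the model cannot confuse it with the instructions.
    return f"{instructions}\n<rule>\n{rule_text.strip()}\n</rule>\n"
```

The resulting string could be pasted into any chat interface or sent through an LLM provider’s API. Keeping the instructions fixed and varying only the rule makes explanations more consistent across a large rule set.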

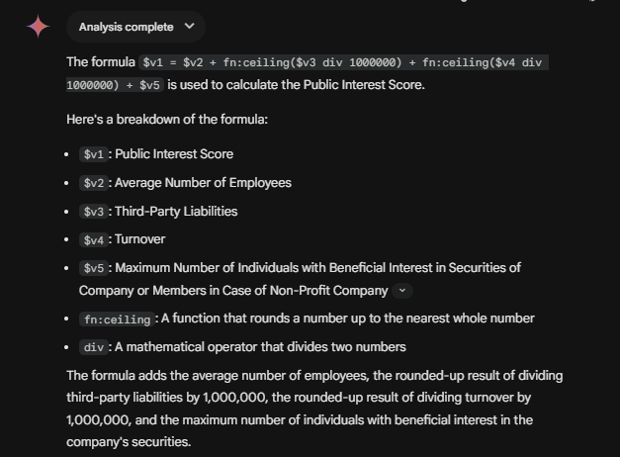

To take this further, I provided a specific formula for calculating a Public Interest Score. Gemini broke down the variables and explained the logic in simple terms, making the rule understandable even to non-technical users.

These experiments suggest a promising use case for LLMs in taxonomy rendering tools, where they could act as on-demand interpreters for complex formulas.

Rethinking Taxonomy Navigation

Traditionally, taxonomies are navigated using hierarchical structures, requiring users to drill down through nesting levels to understand relevant concepts. While effective, this approach can be cumbersome when seeking to understand disclosures in context.

What if LLMs could offer a more intuitive way to explore taxonomies?

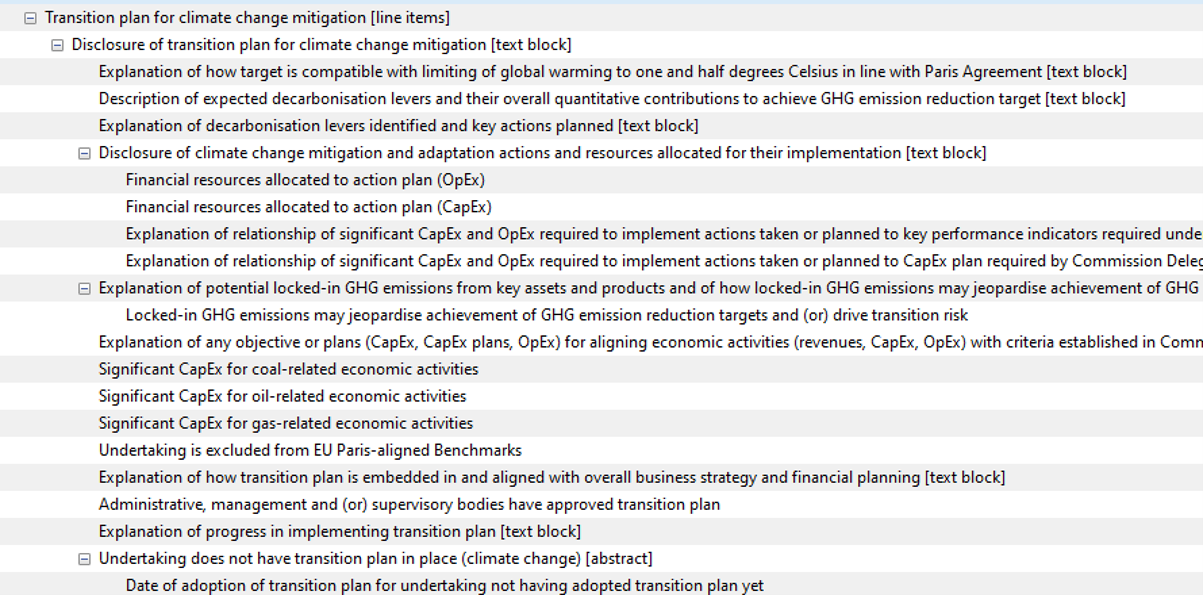

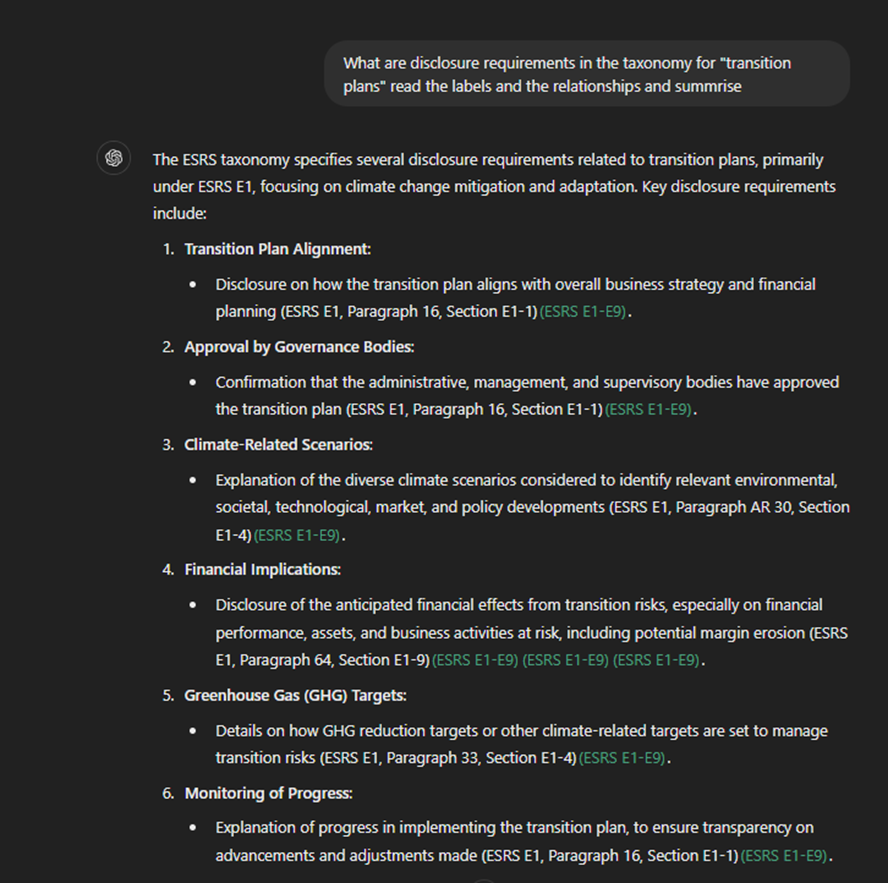

To explore this, I provided ChatGPT with an extract of the ESRS taxonomy in JSON format, taken from the Inline XBRL Viewer embedded in the sample iXBRL report provided by EFRAG. The extract includes concept properties, references, labels, and presentation and calculation relationships.

I asked ChatGPT to search for disclosures related to “transition plans.” At my request, instead of merely listing concepts, it read the taxonomy, identified relevant items and information, and summarised them in a business-friendly manner. It also provided context around the relevant disclosures in the taxonomy.
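Full taxonomies are large, so a navigation tool might first narrow the extract to the concepts that match a search and send only that slice to the model along with the user’s question. The sketch below assumes a simplified, hypothetical JSON structure and invented `ex:` concept names; the JSON embedded in a real Inline XBRL Viewer has its own, richer schema. Matching is naive substring search over the concept name, labels and documentation.

```python
import json

# Hypothetical, simplified taxonomy extract; concept names are invented.
EXTRACT = {
    "concepts": {
        "ex:TransitionPlanAdopted": {
            "labels": {"std": "Transition plan for climate change mitigation is adopted"},
            "documentation": "Indicates whether the undertaking has adopted a transition plan.",
        },
        "ex:TransitionPlanTargetYear": {
            "labels": {"std": "Target year of transition plan"},
        },
        "ex:GrossScope1Emissions": {
            "labels": {"std": "Gross Scope 1 GHG emissions"},
        },
    }
}


def concept_text(name, props):
    """Concatenate the searchable text of one concept, lower-cased."""
    parts = [name, *props.get("labels", {}).values(), props.get("documentation", "")]
    return " ".join(parts).lower()


def search_concepts(extract, query):
    """Naive keyword filter: keep concepts whose text contains every query word."""
    words = query.lower().split()
    return {
        name: props
        for name, props in extract["concepts"].items()
        if all(word in concept_text(name, props) for word in words)
    }


def build_navigation_prompt(extract, query, question):
    """Send only the matching slice of the taxonomy, plus the user's question."""
    subset = search_concepts(extract, query)
    return (
        "Using only the taxonomy extract below, answer the question and explain "
        "how the concepts relate to each other.\n\n"
        f"<taxonomy>\n{json.dumps(subset, indent=2)}\n</taxonomy>\n\n"
        f"Question: {question}\n"
    )


print(build_navigation_prompt(EXTRACT, "transition plan", "Which disclosures relate to transition plans?"))
```

A production tool might replace the keyword filter with something more robust, such as matching on references or presentation groups, but the shape stays the same: retrieve a relevant slice, then let the model summarise it.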

This approach could offer a more natural way to explore and understand taxonomies, making the experience more user-friendly. The idea isn’t to get rid of the hierarchical approach but to use LLMs for smarter navigation. LLMs can help make taxonomy structure easier to grasp, showing how concepts connect within the hierarchy and highlighting related concepts that might be of interest.

It might just be time to rethink how taxonomy navigation works.

Final Thoughts

The integration of LLMs into taxonomy workflows presents exciting opportunities for label refinement, taxonomy review, formula interpretation, and navigation improvements. These capabilities could enhance efficiency, improve clarity, and provide business users with a more intuitive way to engage with taxonomies.

While there’s still much to explore—and there may well be further applications to uncover—one thing is clear: LLMs are not just tools for automation but intelligent assistants that can support the evolution of taxonomy design and usability.